For decades, the scientific method taught in classrooms has followed a neat, linear path: observe, hypothesize, test, conclude. This hypothesis-driven approach has become so deeply embedded in our understanding of “real science” that research proposals without clear hypotheses often struggle to secure funding. Yet some of the most transformative discoveries in history emerged not from testing predictions, but from simply looking carefully at what nature had to show us.

It’s time we recognized that exploratory science, sometimes called discovery science or descriptive science, is just as valuable as its hypothesis-testing counterpart.

What Makes Exploratory Science Different?

Hypothesis-driven science starts with a specific question and a predicted answer. You think protein X causes disease Y, so you design experiments to support or refute that relationship. It’s focused, efficient, and satisfyingly definitive when it works.

Exploratory science takes a different approach. It asks “what’s out there?” rather than “is this specific thing true?” Researchers might sequence every gene in an organism, catalog every species in an ecosystem, or map every neuron in a brain region. They’re generating data and looking for patterns without knowing exactly what they’ll find.

The Case for Exploration

The history of science is filled with examples where exploration led to revolutionary breakthroughs. One of my lab chiefs at NIH was Craig Venter, who became famous for an exploratory project: sequencing the human genome. Neither the publicly funded Human Genome Project nor Venter’s competing private effort tested a hypothesis; they mapped our genetic code, creating a foundation for countless subsequent discoveries. Darwin’s theory of evolution by natural selection emerged from years of cataloging specimens and observing patterns, not from testing a pre-formed hypothesis. The periodic table organized elements based on exploratory observations decades before anyone understood atomic structure.

More recently, large-scale exploratory efforts have transformed entire fields. The Sloan Digital Sky Survey mapped millions of galaxies, revealing unexpected structures in the universe. CRISPR was discovered through exploratory studies of bacterial genomes and immune systems, not because anyone was looking for a gene-editing tool. The explosive growth of machine learning has been fueled by massive exploratory datasets that revealed patterns no human could have hypothesized in advance.

Why Exploration Matters Now More Than Ever

We’re living in an era of unprecedented technological capability. We can sequence genomes for hundreds of dollars, image living brains in real time, and collect environmental data from every corner of the planet. These tools make exploration more powerful and more necessary than ever.

Exploratory science excels at revealing what we don’t know we don’t know. When you’re testing a hypothesis, you’re limited by your current understanding. You can only ask questions you’re smart enough to think of. Exploratory approaches let the data surprise you, pointing toward phenomena you never imagined.

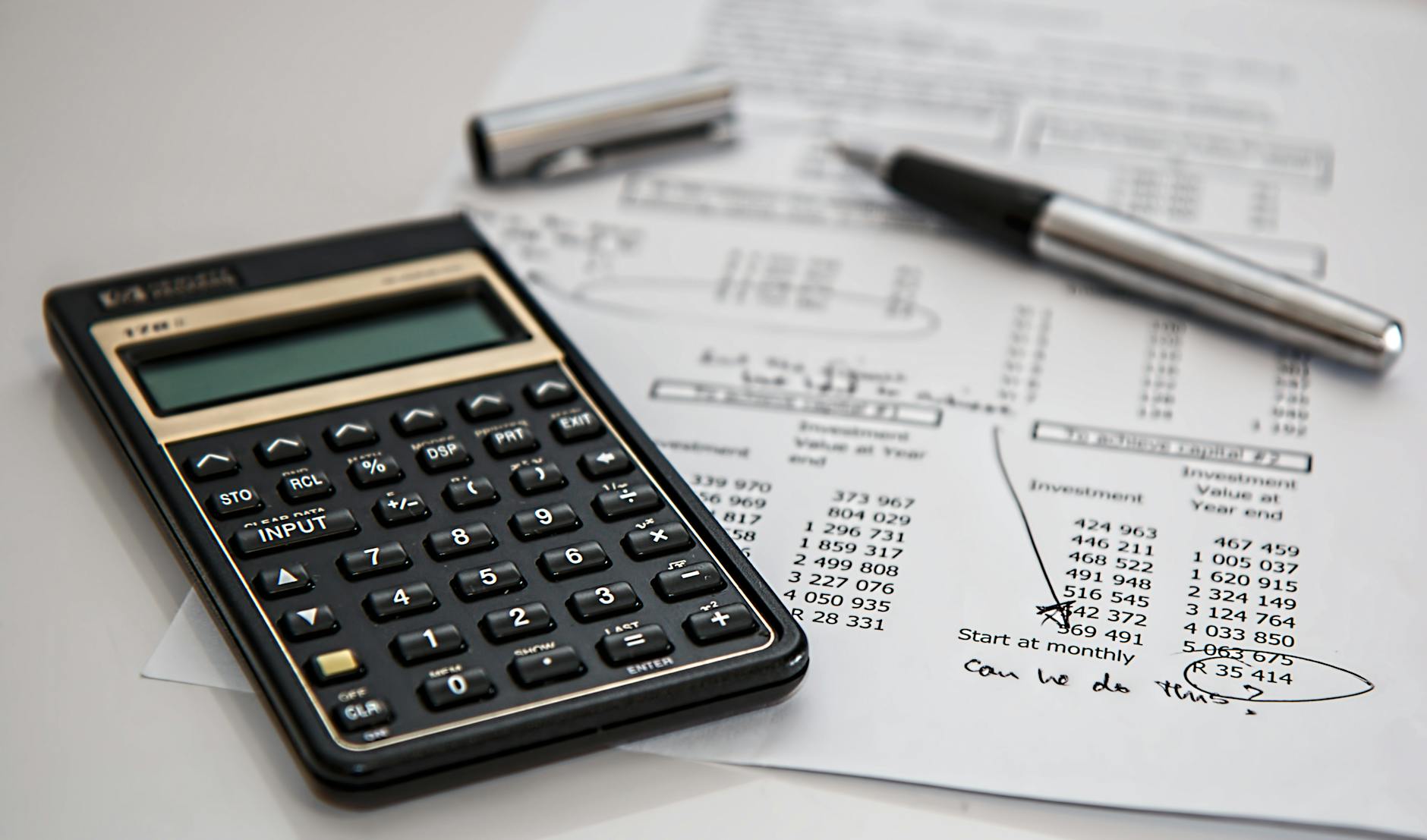

This is particularly crucial in complex systems—ecosystems, brains, economies, climate—where interactions are so intricate that predicting specific outcomes is nearly impossible. In these domains, careful observation and pattern recognition often outperform narrow hypothesis testing.

The Complementary Relationship

None of this diminishes the importance of hypothesis-driven science. Testing specific predictions remains essential for establishing causation, validating mechanisms, and building reliable knowledge. The most powerful scientific progress often comes from the interplay between exploration and hypothesis testing.

Exploratory work generates observations and patterns that inspire hypotheses. Hypothesis testing validates or refutes these ideas, often raising new questions that require more exploration. It’s a virtuous cycle, not a competition.

Overcoming the Bias

Despite its value, exploratory science often faces skepticism. It’s sometimes dismissed as a “fishing expedition” or “stamp collecting,” mere data gathering without intellectual rigor. This bias shows up in grant reviews, promotion decisions, and journal editorial decisions.

This prejudice is both unfair and counterproductive. Good exploratory science requires tremendous rigor in experimental design, data quality, and analysis. It demands sophisticated statistical approaches to avoid false patterns and careful validation of findings. The difference isn’t in rigor but in starting point.
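To make “avoiding false patterns” concrete, here is a minimal sketch of one common safeguard: the Benjamini-Hochberg procedure, which controls the false discovery rate when many comparisons are run at once. The data are simulated, and the function name, the seed, and the 1,000-measurement screen are illustrative assumptions, not taken from any particular study.

```python
import random


def benjamini_hochberg(p_values, alpha=0.05):
    """Return sorted indices of tests kept as discoveries at FDR level alpha."""
    m = len(p_values)
    # Rank the tests from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k whose p-value is <= (k / m) * alpha.
    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            cutoff = rank
    # Every test ranked at or below the cutoff counts as a discovery.
    return sorted(order[:cutoff])


# Hypothetical screen: 1,000 measurements that contain no real signal,
# so every p-value is just a uniform random number.
rng = random.Random(0)
null_p_values = [rng.random() for _ in range(1000)]

# Naive approach: call anything with p < 0.05 a "pattern".
naive_hits = [i for i, p in enumerate(null_p_values) if p < 0.05]
# Corrected approach: apply the false-discovery-rate procedure first.
corrected_hits = benjamini_hochberg(null_p_values)

print(len(naive_hits), "naive hits;", len(corrected_hits), "after correction")
```

With pure noise, the naive threshold is expected to flag roughly 5% of the measurements as “patterns,” while the corrected procedure should keep few or none. That gap is the kind of false pattern a rigorous exploratory analysis has to guard against.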

We need funding mechanisms that support high-quality exploratory work without forcing researchers to shoehorn discovery-oriented projects into hypothesis-testing frameworks. We need to train scientists who can move fluidly between both modes. And we need to celebrate exploratory breakthroughs with the same enthusiasm we reserve for hypothesis confirmation.

Looking Forward

As science tackles increasingly complex challenges—understanding consciousness, predicting climate change, curing cancer—we’ll need every tool in our methodological toolkit. Exploratory science helps us map unknown territory, revealing features of reality we didn’t know existed. Hypothesis-driven science helps us understand the mechanisms behind what we’ve discovered.

Both approaches are essential. Both require creativity, rigor, and insight. And both deserve recognition as legitimate, valuable paths to understanding our world.

The next time you hear about a massive dataset, a comprehensive catalog, or a systematic survey, don’t dismiss it as “just descriptive.” Remember that today’s exploration creates the foundation for tomorrow’s breakthroughs. In science, as in geography, you can’t know where you’re going until you know where you are.